Screw Hashbangs: Building the Ultimate Infinite Scroll

I’m just a student in a field unrelated to computer science, but I’ve been coding for years as a hobby. So, when I saw the current state of infinite scroll, I thought perhaps I could do something to improve it. I’d like to share what I came up with.

(Demo: The impatient can just try it out by scrolling+navigating away+using the back button on the front page of my site. Works in current versions of Safari, Chrome, Firefox.)

Hashbangs Lie

Anybody that has even casually coded JavaScript over the past two years can tell you the story: Google proposed using the hashbang (#!) to enable stateful, crawlable AJAX pages. This proposal caught on. But at its root, the hashbang is a messy solution to a difficult problem: JavaScript is capable of creating an entire application, but browsers are still gaining the ability to update the URLs in their address bars to represent application state. (IE’s progress in this regard is abysmal and inexcusable).

Unfortunately, hashbangs are the dirt on a clean countertop. We’ve spent years trying to clean up our URLs and make them semantic by managing mod_rewrite, readable slugs, and date formatting. Now, we take a step backward, forcing the average internet user to learn another obscure set of symbols that make URLs harder for them to parse. Hashbangs are pollution.

But the real problem is the lying. Originally, a URL pointed to a resource and the deal between user and browser was this: you asked for something, and it was delivered to you. When that URL had a fragment identifier (e.g. url/#frag_id) at the end, there was still the tacit promise that the fragment existed on the page. But when your URL ends with a hashbang, the terms of the deal between user and browser change dramatically. Indulge me in an analogy.

Hashbangs for Lunch

Let’s say you are at a restaurant. We’ll call it cafe.com. Glancing through the entrées, you decide you’d like a burger, with some ketchup on the side (a truly great burger needs no ketchup… humor me here). “Coming right up” says your server, and leaves to retrieve your order.

Case 1

cafe.com/entrees/burger/ketchup/

If the server accepted your order with a URL sans fragment identifier, then you get everything at once, your ketchup is on the side of your plate, and you can immediately begin enjoying your delicious burger.

Case 2

cafe.com/entrees/burger#ketchup

If the server accepted your order with a URL PLUS fragment identifier, they’ll bring some ketchup to the table. “Where’s my ketchup?” you ask. The waiter helpfully points to the bottle of ketchup hiding behind the napkins. Everyone still gets what they want.

Case 3

cafe.com/#!/entrees/burger/ketchup

The system breaks down with the hashbang-style order. Best case scenario, here’s what happens: your burger arrives without ketchup. “Oh, that’ll be here in a bit” the waiter says, “I’ll make a separate trip to bring it over.” Here, the hashbang was guilty of, essentially, a lie of omission.

But here’s the worst case scenario for #3: you walk into a completely empty room. The waiter awkwardly walks up to you (remember, the room’s empty, you can’t even sit down yet) and takes your order. After staring at the empty room for a while, furniture begins to show up, and the restaurant takes shape. You see the waiter come back with a Weber grill.

“What the heck is going on?”

“We’re assembling it all for you, right here!” the waiter replies. A multitude of trips bring over a cook, the raw ingredients, and the whole darn thing is constructed in front of you. Your reaction is less than favorable: “But I just wanted a burger! Whatever happened to using a kitchen?! All the other restaurants just bring it to me all at once!”

The ketchup is applied for you, with a superfluous and sloppy auto-scrolling mechanism.

I call this “lying” because you don’t exactly get what you requested. You get factories and special features and all this extra stuff.

Read on to see how I applied this lesson to infinite scroll.

What’s OK with ∞ Scroll

An infinitely scrollable page is conceited because it presumes to know what you want. “Oh, you’ve reached the bottom of the page?” it asks… “well, why don’t I show you more?” This facilitates casual browsing and is especially well-suited to image galleries.

The fat footer does not suit my purposes, so I am perfectly FINE with the page assuming you’d like more, making the end of the page some weird variation of one of Zeno’s paradoxes. If you don’t feel comfortable with this or your projects don’t require such behavior, then infinite scrolling isn’t for you. That’s fine. Thanks for reading.

But you might want to hear the real problem…

What’s NOT OK with ∞ Scroll

Paul Irish’s thorough and utilitarian infinite-scroll.com starts to get at the main problem of tacking on content to the end of your page:

There is no permalink to a given state of the page.

Well, yes. If you only implement infinite scroll halfway. What I wish Mr. Irish would’ve said: FOR GOD’S SAKE, DON’T BREAK THE BACK BUTTON.

Solution

I’ve written an improved infinite scroll (which you can try out on the front page of my site) that does the following:

- Preserves the back button.

- Does NOT use the hashbang, no matter how happy twitter engineers are with it.

- Gives up on infinitely scrolling if (1) is impossible.

- Progressively enhances: when (3) occurs, the user follows a good ol’ hyperlink for more content.

Remember, “If your web application fails in browsers with scripting disabled, Jakob Nielsen’s dog will come to your house and shit on your carpet.”).

I will outline my thought process here, but remember I’m just doing this for a hobby, so should you want to use this, you’ll probably want to recode it yourself. Let’s get started!

URL Design

I had to have a way to represent my page browsing states in a way that was easy to parse with regular expressions yet meaningful to the user. My schema:

load second page:

/page/2/

load 2 pages of results, including page 2, from beginning:

/pages/2/

the same as previous, except page 1 is explicit:

/pages/1-2/

load 2 pages of results, including page 3, excluding page 1:

/pages/2-3/

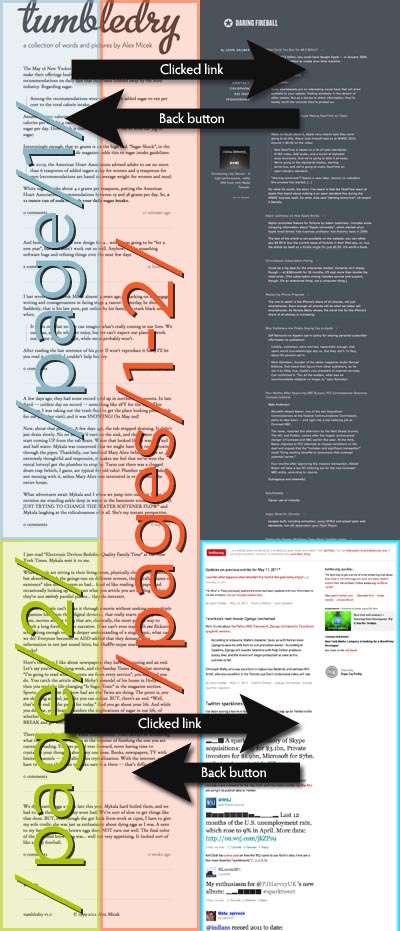

Here’s a helpful illustration:

I’ll annotate that image as we put this together.

Debouncing

I’m sure you’ve noticed that scroll-activated & mouseover JavaScript aren’t always robust. Quickly scroll to the bottom, then back to the top or mouseover-mouseout very fast, and suddenly JS fails to recognize your true intent. Things get stuck on or off… it’s a disaster.

In these situations, you should always debounce:

Debouncing ensures that exactly one signal is sent for an event that may be happening several times — or even several hundreds of times over an extended period. As long as the events are occurring fast enough to happen at least once in every detection period, the signal will not be sent!

I use the jQuery doTimeout plugin. Now that we can reliably detect scrolling to the bottom of the page, we begin the fun stuff.

Adding a Page

jQuery makes it simple (fun?) to extract the URL for the next page from a link I’ve placed at the bottom of the current page. Using the example image from above, let’s say we’re on page/1/. We scroll to the bottom, our debounced script detects this, and AJAX fires to load page/2. The page/2 URL is contained within the “↓ More” link at the bottom of the page. The AJAX result is appended to the page and the “More” link hidden.

Done!

Not if you want your user to enjoy using your page. Two problems:

- Your user is marooned on a giant, growing page. They see something cool and copy the current URL. Their friend clicks the URL and has NO IDEA what is going on.

- Your user clicks a link that interests them. “Cool!” they say, “I’ll just click the back button and keep browsing where I left off!”

*Clicks back button*.

And now they’re stuck at the very first page, having no idea how to get to where they were.

Both cases result in confusion — you’ve abandoned your user in the name of a cool way to add new content! No no no no. Repeat after me: “I must update the address bar to reflect the new state of the page.”

Updating the Address Bar

This is where you and I may differ — I will ONLY use history.pushState/replaceState to update the address bar. I don’t care how many libraries have been written to ease the process of falling back to hashbang-preservation of application state. I refuse to use hashbangs (Cf. the intro to this post!), and I wrote my infinite scroll script to take absolutely no action if history.pushState is not supported. (A nice detection of history support, borrowed from the excellent Modernizr: return !!(window.history && history.pushState);).

Now, I have the luxury of using JavaScript simply as a progressive enhancement; thus, I prefer to revoke functionality instead of spending days of my life working around old browsers. Again, I understand you may not have this luxury, and must use hashbangs for your project. Godspeed.

For those of you still left, put on your regex pants, here we go. I wrote a potent little regular expression to parse URLs of the schema described above. Here’s the unescaped version:

/pages?/([0-9]+)-?[0-9]*/?$

This regular expression is run against up to two strings:

- BROWSER URL (

window.location.href), two variations:

/page/xx/

or this:

/pages/xx-yy/ - The “↓ More” link at the bottom of the page:

/category/page/zz/

Using TWO possible sources for page information makes the script robust, always able to determine how to update the URL. It works like this:

- Load tumbledry.org/

- Scroll. Detect the “↓ More” link and concatenate its contents to the current page. Parse the “↓ More” link using the regex above, and update the URL to

tumbledry.org/pages/1-2/ - Scroll. Detect the “↓ More” link and concatenate its contents to the current page. Parse the current address bar link using the regex above, and update the URL to

tumbledry.org/pages/1-3/ - Repeat (3).

Now that we are updating the URL to represent the state in an honest way, the user gets to navigate without jarring changes to their page location. I’ll explain below.

Handling the Back Button for Inter-Page Navigation

The user can freely click links and hit back to the infinitely scrolling page without worrying about losing their place:

The first set of arrows should be self-explanatory — just your usual browsing. The second set of arrows illustrates how an intelligent infinite scroll can update the address bar URL. When the green area is tacked on to the blue, the script updates the URL to the red area: (/pages/1-2/); this reflects the state of the page. Now, when the user hits the back button, they end up exactly where they were on the page. Why? Because the URL they are navigating back to (illustrated in red) loads all the content the they were viewing (illustrated in blue and green).

Handling the Back Button for Intra-Page Navigation

Note: I originally thought that this behavior would be helpful rather than hurtful. Judging from the comments, I’ve learned otherwise. Since then, I’ve disabled this type of back button handling, and reinstated traditional back button behavior.

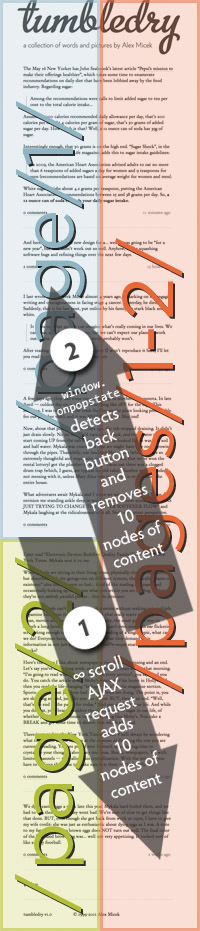

There’s one other exciting application of this address bar URL-updating we’ve been doing. Using window.onpopstate to detect a back-button press, the infinitely scrollable page can move backwards through the history stack, and remove nodes of content accordingly.

An interesting wrinkle: I’m using about a 200ms delay between the user hitting the bottom of the page and the AJAX firing to retrieve the next page. So, when the user hits back and the nodes are removed, they end up at the bottom of the last page, leaving them about 200ms to scroll up out of the target zone. So, using the window.onpopstate function, I temporarily modify the delay to 3000ms, giving the user time to scroll up and out of the target zone.

By adding and refining this popstate detection, the user gets a pretty darn good experience:

- linkable pages without an overwhelming number of items

- predictable forward and backward button behavior

- no need to keep clicking “next” or “more”

Known Issues

- Because

/page/1/has some of the same information as/pages/1-2/, my content management system ends up caching some duplicate information. This could be mitigated on the back-end by having the system intelligently assemble/pages/1-2/from already cached items, but given the small scope of my site, it’s not necessary. - If I navigate away from

/pages/1-2/and then come back, my saved value of the number of nodes previously added is lost. So, whenwindow.onpopstatefires, my code must make an educated guess about the number of nodes removed. This could be fixed by using local storage to save an array of nodes added and removed. - The entire purpose of updating the URL to

/pages/1-2/is to put the user precisely at their previous position on the page. This system breaks down if the user pops open some inline comment threads, navigates away, and then returns. I did not see a semantic way of saving this comment thread open/close information in the URL, so I didn’t bother creating a system to save this particular state. Again, some local storage could be used to save which comment threads were open, and pop them open again when the user returns to the page.

Enhancements

By caching the nodes added and removed, intra-page back button use followed by scrolling could be done without any extraneous HTTP requests.

Conclusions

I stubbornly insist that my JavaScript will only give you infinite scroll if your browser supports the event handlers and objects required to give the best experience.

That way, I am able to ensure that infinite scroll is all gain and no loss.

If for some reason you really want to read my source, you can do so:

Comments welcome.

Comments

Richard Roche

Very interesting post. This is the stuff that impresses me, because my “coding” skills consist of googling tutorials and modifying them to fit my needs.

Ben

This is fantastic.

Jack

Awesome implementation and a wonderful showcase of progressive enhancement. Good semantic links, and most importantly, the ability to use normal web behaviors (something I’ve lost several arguments over the importance of.)

Jeff Minard +1

Sounds really good, but there’s one flaw — the /pages/1-4 type link doesn’t last over time. I mean, the content of “page one” in blogs is chronologically reversed. So as time goes on and you post more, the content of page one gets pushed further and further off to page 2 and beyond.

It would be less cool looking, but more accurate, to load the page as /posts/293-283, wherein those are the postID numbers (or whatever primary key you use). That way, the link will stay accurate forever.

Otherwise, really nice implementation, I like it a lot.

Jon

Hi, love the analogy, great post.

On the subject of infinite scrolls, like hashbangs themselves I think they subvert how you’d intuitively expect a web page to behave (not just based on experience, but on what the browser UI promises you).

Eg. I dragged the scrollbar to the base, hoping to see an RSS icon, but the footer was immediately moved on. Infinite scroll makes any kind of footer worthless, you’re chasing it like one of those ‘click on me for a pay rise’ buttons that move when you mouse near them.

Markus +1

Thank you Alex for taking the time to share this with the community.

You are very well appreciated, Markus

Ricardo

Don’t forget to add a to all possible states of the page, so you don’t end up splitting/duplicating your content over lots of URLs.

lol +2

I still hate infinite scroll

John

You should put this on github.

James Darpinian

Really cool; way better than any other infinite scroll I’ve seen. However, URLs like /pages/1-40 seem problematic because the pages will get huge. Seems like you need a way to request just the articles for the 40th page while leaving space for 1-39 to be loaded on demand if the user scrolls up. Unfortunately you can’ t know exactly how tall 1-39 will be until the browser renders them, but perhaps that can be worked around with some clever JavaScript that tweaks the scroll position when stuff loads.

DrPizza +1

Your implementation breaks the browser—or at least, this browser (Chrome dev, so probably about version 13)—in a couple of unpleasant ways.

I’ll just say up-front that I do like that your script means I can click to an offsite link and then hit back and not lose my place. That does remedy perhaps the biggest sin of infinite scrolling pages, and is a laudable ideal.

But the other broken stuff is much less pleasant.

I think it’s conceptually flawed to inject new pages into my history just from scrolling down. I visit your site; I page down a few times, seamlessly and automatically loading new content. I then hit the back button, hoping to be taken to wherever I was before visiting your blog. But instead, I just scroll up the page a little. I hit back again; the same thing happens. It is not until I have hit back a half dozen or so times—returning to the bare URL with no /pages/ additions—that my back button finally works.

This is broken. I did not click a bunch of links on your site; I just scrolled. You should not act as if I had navigated several layers deep, because I did not. I realize that you engineered this behaviour deliberately; I am just not sure why. It is expectation breaking. If you are going to make page loads seamless and transparent, they should not make their presence felt when I click the back button.

Secondly, you break the forward button. I visit your home page, and scroll down several pages. I press back a few times, then I press forward once, and boom, I have no more forward history.

I assume that the history modification API is to blame, but it is not pleasant behaviour. Clicking a link should clobber my forward history, but merely using the back and forward buttons should not.

Alexander Micek

Thanks for the responses, folks.

@Jeff Minard — I understand your concern with preserving exactly what the URL points to by using /posts/293-283. Unfortunately, if posts 294, 295, 296 are deleted, THAT link won’t stay accurate forever. I do think your solution is more robust, though.

@James Darpinian — those URLs do, in fact, generate rather huge pages. I haven’t figured out a good way to solve the problem. For what it’s worth, I did add an upper limit on the number of pages my backend code will output at once. That way, some joker can’t request pages/1-2000/ and try to load my entire site in one page.

@DrPizza — very well thought-out response; I appreciate your taking the time! I actually thought about exactly the issue you are experiencing — that of trying to back-button out to a previous website when you are a few pages into infinite scrolling on mine. I had thought I could add a little JavaScript to detect if the back button was rapidly pressed twice (i.e. “GET ME OUT OF HERE”) and delete the entire <tumbledry.org> pushState history stack, allowing the user to get back to whatever referring page had landed them here.

I actually didn’t write that because I thought nobody would care enough to notice! I should have been more optimistic about this code attracting enough attention for a proper review and test! Thanks again for your response — your critiques are quite valid.

KevinH

Not sure I can agree that the desired back button behavior after scrolling down through three page-state transitions should be to show me each of those previous states again. This seems to be a tactic just to make it harder for me to leave your site. If I get linked to your site from a blog, then read a couple days worth of your posts (getting the infinite scroll behavior without even really being aware of it) and then try to go back to the page that sent me here, it should take me back!

Hutch

Have you thought about using history.replaceState() instead of pushState, since you’re not actually navigating to a different page, it makes more sense as others have mentioned.

Zapporo

Nice article! How would you approach this use case: I arrive on /page/1/, and want to jump to the middle or end of the document (last photo, first tweet). I run into this with twitter where I am trying to find a tweet I saw a few days ago. I wish they had paging so I could skip around. I find the scroll wait for load, scroll wait for load repetition really annoying, and it ends up being a net negative experience even though it is an edge case.

Another case would be an active forum that might have 500 posts in a thread. I often want to jump from page 1, to the middle, to the end to quickly get a send of the development of the discussion.

Paul Irish

Niiice work! It has been a long time since I looked at infinite scroll and discussed a proper implementation. I think your critiques are well founded and your solutions wise.

Anyway I updated http://www.infinite-scroll.com with what you wish I said. ♥

Interested

What is the license on this code?

Giovanni Cappellotto +2

I was talking about infite scrolling pros and cons last week with @bugant and your solution takes this pagination method to the next step, thanks for sharing!

Daniel Swiecki

Alex, in response to DrPizza’s criticism of having multiple history states for your website, thus preventing users from reaching would it be inefficient to remove the current state from the stack each time right before a new state is pushed, so that the stack size is at most 1? I think this could be done in O(1) time, but I may be missing something… Is it even possible to have an empty history stack with the history modification API?

Sorry, I don’t think I articulated that very well. Thanks anyways for the great post.

Luke Shumard +1

I agree with all of the points you’re making, and as an FYI, almost all of them are already in the 2.0 beta of the Infinite Scroll plugin. https://github.com/paulirish/infinite-scroll

Dave Paola

Thanks for this. Rockin. This is obviously a more general problem that you outline, the URL state reflection. I’m currently ripping apart some code that stores things in the session that really should be URL parameters (yuck). Everyone keeps recommending hashbangs and now I have some ammunition.

But…your solution still doesn’t make your pages crawlable by google, right? Which was the original goal of #!? (#!? is fun to type)

Alexander Micek

@Paul Irish — thanks for the update on your infinite scroll page! Your site is how I learned about the name and original implementation for the technique. Thanks for stopping by!

@Dave Paola: well, it depends on how we define crawlable. Because distinct links (

/page/1//page/2/etc.) exist at the bottom of each page, then all of the content is crawlable. All of the states (/pages/1-3/), however, are not crawlable. I’m comfortable with the former definition, rather than the latter, for my use.@Luke Shumard: thanks for pointing that out to me. Great to see the project moving forward!

@Daniel Swiecki, I think you are describing the behavior of

history.replaceState()(Cf. Hutch’s comment).@Zapporo: if you kept track of the nodes added (trivial with jQuery), and measured their vertical height (perhaps trivial, but I don’t know), then you could add the pagination shortcuts you propose (e.g. skip forward a “page” within the current page). In that instance, you could use a fragment identifier in an honest way to represent the state after you used the shortcut. Something like:

/pages/1-5#p2— nice idea! The hardest part of implementation would probably writing (or finding a library) for reliable cross-browser keypress detection.License

I hadn’t ever thought of a license, since this is really more a technique than “software”. Anyhow, let’s go with an MIT License.

Miguel

It’s just and idea but would it be possible to change the way of presenting data? Instead organizing it among “pages”, to present them directly as “posts” (or whatever title you might prefer). I mean when you start at the top, the browser adds an anchor to the first post’s ID (/blog/#trip-to-spain) and, as you scroll down, it changes as you hover each post: /blog/#pic-of-my-son -> /blog/#new-job, etc. Assuming you don’t store them on browser’s history, at the very instant the user would like to link the web URI, he/she would have the “exact” position for his friend to visit, instead the long list.

Finally, as the user scrolls up or down, the browser adds more posts.

PS: Wouldn’t it be preferable to put everything inside a div with overflow property, so a footer is always visible?

Alexander Micek

I like your idea, Miguel. Provided that posts weren’t deleted, I think your semantic anchors could work quite nicely. However, they would have to be paired with the

pages/x-y/structure in order for them to function without JavaScript (a key part of progressive enhancement).It would be difficult to train people to think of things this way. Given your method, the user could just copy the URL when they had scrolled to what they were interested in. Right now, users are accustomed to having to find a permalink to the individual post of interest. And of course, not all websites would put this together the same way, so users would most likely revert to their old behavior of looking for that permalink. However, I do think this is a good step in terms of giving the user ‘landmarks’ reflected by the URL as they scroll a long page.

I understand your note on the footer, but the stuff I have in there just does not need to be visible. I’d hate it if my books had copyright notices at the bottom of every page, so I try to avoid it on my websites. However, I can see how some people would want their footer to be visible all the time — in those instances, a “section” tag w/overflow property would be a good way to solve the problem.